I’ve been wondering about this for a few years now: will machines be trained to spot and identify cars faster and more accurately than humans? Thanks to a link to an article sent to me by CC reader Carlton C., it’s confirmed. A system called Convolutional Neural Networks (“CNN”) has been trained by scientists to identify cars on Google Street View in 0.2 seconds, as compared to some 10 seconds by human car spotters, who of course had to be hired to train the system.

And now it’s possible to infer a neighborhood’s demographics and political leanings from just that, more quickly and cheaply than by other existing means. As in: a preponderance of trucks means the neighborhood leans Republican, whereas a preponderance of sedans implies Democrats. And more Hondas and Toyotas means Asians. Chrysler, Buick, and Oldsmobile are positively associated with African American neighborhoods. Pickup trucks, Volkswagens, and Aston Martins are indicative of mostly Caucasian neighborhoods.

Surprised yet? Not me. But can it identify a 1959 Mercury?

Here’s the essence of the process:

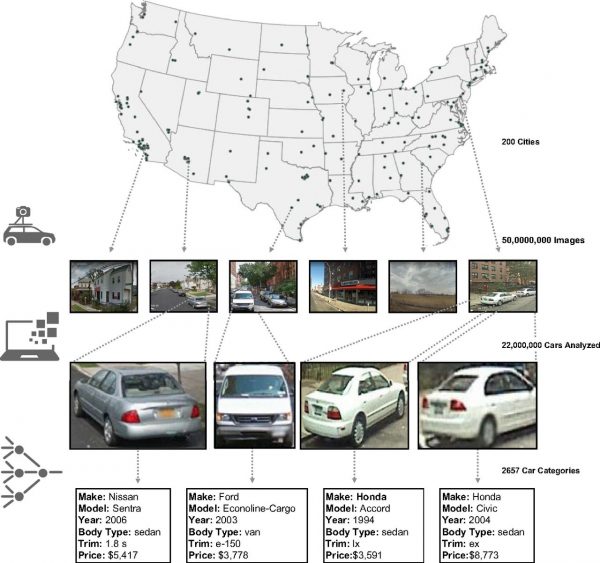

We demonstrate that, by deploying a machine vision framework based on deep learning—specifically, Convolutional Neural Networks (CNN)—it is possible to not only recognize vehicles in a complex street scene but also to reliably determine a wide range of vehicle characteristics, including make, model, and year. Whereas many challenging tasks in machine vision (such as photo tagging) are easy for humans, the fine-grained object recognition task we perform here is one that few people could accomplish for even a handful of images. Differences between cars can be imperceptible to an untrained person; for instance, some car models can have subtle changes in tail lights (e.g., 2007 Honda Accord vs. 2008 Honda Accord) or grilles (e.g., 2001 Ford F-150 Supercrew LL vs. 2011 Ford F-150 Supercrew SVT). Nevertheless, our system is able to classify automobiles into one of 2,657 categories, taking 0.2 s per vehicle image to do so. While it classified the automobiles in 50 million images in 2 wk, a human expert, assuming 10 s per image, would take more than 15 years to perform the same task. Using the classified motor vehicles in each neighborhood, we infer a wide range of demographic statistics, socioeconomic attributes, and political preferences of its residents.
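The throughput figures in that excerpt can be checked with a little arithmetic. A minimal sketch in Python, assuming a 365.25-day year, non-stop work, and perfect scaling across parallel workers (none of which the excerpt states):

```python
# Back-of-envelope check of the throughput figures quoted in the excerpt.
IMAGES = 50_000_000          # images classified, per the excerpt
MACHINE_SEC_PER_IMAGE = 0.2  # per the excerpt
HUMAN_SEC_PER_IMAGE = 10     # per the excerpt

SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY  # assumption: working non-stop

# Time for one human expert to label every image.
human_years = IMAGES * HUMAN_SEC_PER_IMAGE / SECONDS_PER_YEAR

# Time for a single worker classifying images one after another.
machine_days_serial = IMAGES * MACHINE_SEC_PER_IMAGE / SECONDS_PER_DAY

# Parallel workers needed to finish in 14 days (assumption: perfect scaling).
workers_for_two_weeks = machine_days_serial / 14

print(f"human: {human_years:.1f} years")
print(f"machine, one worker: {machine_days_serial:.1f} days")
print(f"workers for 2 weeks: {workers_for_two_weeks:.1f}")
```

Under these assumptions the human figure comes out a little under 16 years, which fits “more than 15 years.” A single worker at 0.2 s per image would need roughly 116 days, so the two-week figure suggests the work was spread over several machines or processes, though the excerpt does not say how.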

In the first step of our analysis, we collected 50 million Google Street View images from 3,068 zip codes and 39,286 voting precincts spanning 200 US cities (Fig. 1). Using these images and annotated photos of cars, our object recognition algorithm [a “Deformable Part Model” (DPM) (11)] learned to automatically localize motor vehicles on the street (12) (see Materials and Methods). This model took advantage of a gold-standard dataset we generated by asking humans (both laypeople, recruited using Amazon Mechanical Turk, and car experts recruited through Craigslist) to identify cars in Google Street View scenes.

Recruited from Craigslist? Why not CC?

I’m impressed, but 2,657 categories of automobiles does leave out some. I’d be curious to see just what that list comprises. And how well this machine does in some really CC-reach neighborhoods.

How did I miss this opportunity? I’ve been training for this since I was 3! 10 seconds is for amateurs.

Me too!

Same here!

Same here! I’ve been telling my wife and friend for years that I have a perfectly good super power going to waste, just waiting for something like this! And now I’ve been replaced by a computer before I even had a shot!

In fact another study found that those of us who are expert car spotters use the same part of the brain used for recognizing faces.

“That area is called the fusiform face area, and for a long time people thought that its only job was to recognize faces. But this study found ‘no evidence that there is a special area devoted exclusively to facial recognition.’ Instead, they found that the FFA of the auto experts was filled with small, interspersed patches that respond strongly to photos of faces and autos both.”

I’ve got to disagree with that one. I am absolutely terrible at recognizing faces, to the point where I rarely greet people by name because I’m so often wrong. But I can instinctively spot the difference between an Aries and a Reliant at 100 yards.

But really… I’d love to have been a part of that study:

“…a team of Vanderbilt researchers report that they have recorded the activity in the fusiform face areas of a group of automobile aficionados at extremely high resolution.”

I’m with Eric703 here … bad with people’s names, even worse with faces, but I’m pretty good with cars. Though I’m not sure about Aries vs Reliant, unless the Reliant is a Robin. But I can remember the only K Car I’ve ever driven, over 30 years ago, and it was a Reliant.

I’m not particularly good at recognizing faces either. Maybe my FFA is all filled up with cars!

Same here as well. My wife often says if I can remember so much trivia about cars why can’t I remember this, that, or the other about people. Maybe people are less interesting…..?

Same here…. I can recognize cars, but not as good with faces…

When I was a kid my parents would actually be slightly annoyed at me for knowing the make/model/year of any random car I’d see, yet not having the slightest recollection of the names of relatives I’d previously met when we’d be on family trips.

I don’t think I’m too bad with faces though, I feel like I never had an issue recognizing someone, but remembering their names is excruciating!

+1: I’m good with facial recognition (and car recognition), but terrible with names. If I’ve met you, then I remember your face, but putting a name to it – not so much. But then I’m in Engineering. Remembering names is a Salesperson or HR Person skill. ;o)

Likewise, and that’s a fact. I’ve frequently met people who I know and they know me, usually by name, and I spend those agonizing minutes trying to dredge up their name without embarrassment. I’m better at cars, plus they aren’t insulted if I don’t remember their names.

I have said to more than one person: “I will probably forget your name, I might forget your face, but I will *never* forget your car.”

+1 😀 More people have recognized me by my car than ever have by my face.

It does say in the article the ID system only goes back to 1990. So lots of CC’s would be missed. But still fascinating what the algorithms can conclude just from the vehicle mix. Great tool for political gerrymandering.

Frightening times, it’s 1984!

Skynet smiles…

Big Brother is watching you (and your driveway)!!!

Wild! But upon reflection not surprising.

I wonder if Google has been doing this privately for years. Their business model is to learn things from all the data they collect that can be used to better sell advertising.

By the way, don’t worry about these things becoming our robot overlords. Just because a computer can recognize cars and other things in pictures as well as we can, or even drive a car safely, doesn’t mean it’s capable of anything remotely like intelligence or understanding. In a general sense it’s modeled on the visual part of the brain, which is relatively well understood. But what real brains can do depends on all that other gray matter, which we know much less about. Your dog is far smarter than any computer, and will be for a very long time to come.

It does well on pictures of whole cars, but I wonder what this system would do with a CC Clue?

Diagram below from “An Intuitive Guide to Convolutional Neural Networks”.

I guess, once you’ve got rid of the 2,657 ‘easy’ ones that just leaves the more interesting and unusual ones for us to sort out!

I think I can beat them on Studebakers. But perhaps not on Sentras and Outbacks.

So how come if a living person makes racial generalizations from cars in a neighborhood it’s likely to be called racist but if a machine does it based on human training it’s not? I’m confused.

Because the authors are from elite academic institutions and are therefore beyond reproach. Remember, they know better than you or me.

That aside, what’s the point? The report claims that the car-based inferences can spot trends quicker than demographic information such as from the Census Bureau’s American Community Survey. I’d like to see an example of that. Census data is free, and easily downloadable in huge quantities to perform demographic analyses. And for major categories like race, income, etc., it’s fairly accurate.

So a sample of cars is supposed to do better than that? First, if they’re counting cars driving on a major street, that’s not very accurate to measure neighborhood demographics, since most will be driving from elsewhere. Second, I doubt that any neighborhood’s inventory of vehicles would change quicker than Census data.

Plus, the demographics of car buyers can change quickly too, so the base data would need to be continually updated.

Despite its novelty from a car-enthusiast perspective, to me this seems like the work of a bunch of academics with too much time on their hands.

I actually work for the Census Bureau at their headquarters in Suitland, Maryland. Suitland is one of the worst areas in Maryland and makes the bad parts of nearby DC seem good by comparison.

However, if you drive around the neighborhoods in Suitland you will see an abundance of late-model luxury cars, making it look like the area is wealthy instead of being a poor and miserable place to exist.

By contrast, driving through Clarksville, Maryland (one of the wealthiest areas in the country), you will see large numbers of Hondas, Toyotas and pickup trucks.

“The Millionaire Next Door” made a similar observation.

Written in the late 90s, it noted that F-150s, Camrys, Caprices, and Tauruses were much more popular with the affluent than one would think, and BMWs and Porsches were less.

However, Mercedes and Lexus were well-represented.

I still have that book, and the Panther platform lovers on here will be happy to know that the Crown Vic topped the list.

“Because the authors are from elite academic institutions and are therefore beyond reproach. Remember, they know better than you or me.”

Methinks someone’s got their tinfoil hat on too tight. Where did they ever explicitly state this?

“So how come if a living person makes racial generalizations from cars in a neighborhood it’s likely to be called racist but if a machine does it based on human training it’s not?”

Collecting factual data, by computers with cameras or by humans on foot, and analyzing it to learn the fact that Borgwards and Edsels are more likely to be driven by Upper Slobovians, is simple demographics. Saying Borgwards and Edsels are driven by inherently inferior or superior beings would be racist.

MikePDX for the win!!

I’ll take Reason and Logic for $200, Alex.

MikePDX is correct. Stating a fact is just stating a fact. Making assumptions based on those facts is where the problem lies.

Ol’ John Henry loses yet another job to automation

So the truth comes out!

CNN is not Cable News Networks!

It’s Convoluted Neural Networks!

Now it all makes sense 😎

(Sorry..off topic but I just had to get there first!)

+1

I did not realize there were enough Oldsmobiles still around to sway the outcomes of neighborhood demographics. The few Oldsmobiles in my neighborhood (Washington County, Oregon) are owned by Caucasians and Hispanics.

Thank you for sharing this Paul.

Interesting. Does this tell Google information that census data and voting records don’t already? Not to mention car registrations.

Personally I would be interested in seeing vehicle mix stats for various areas, but more for its own sake than to try to infer a lot about the owners.

My job involves going into a lot of apartment complexes. I’ve found that I can speculate pretty accurately on the socioeconomic and cultural characteristics of the residents from what’s in the parking lots.

If they think Hondas and Toyotas imply Asian neighborhoods, they’re just dumb. In fact that’s a crudely racist preconception with no factual basis.

Hondas are solidly white Democrats, and Toyotas are everywhere. People buy Toyotas because they want a car that runs.

Not surprisingly, you got this wrong (again). It’s not simply the presence of Toyotas and Hondas; it’s the relative density of them. Having been in some heavily Asian neighborhoods in LA and the Bay Area, I can assure you there is a very real correlation here.

The whole point is that this is based on fact.

So how is the fact that Asians do indeed prefer Hondas and Toyotas, and most won’t be caught in a US brand car, racist, but the fact that Chryslers, Buicks and Oldsmobiles show up in black neighborhoods isn’t?

Accord, Civic, Wrangler and Silverado. I wonder what CNN would conclude from my driveway?

White.

I would say that you are someone who likes cars. One for every need and want. You respect the Hondas but like the Chevy best. Jeep is a present to yourself. Caucasian, lean conservative but are open-minded. Blue collar but a little monied. Clean-lined goatee or tidy beard. Wear jeans but not cheapest. How’d I do?

Geez you guys are pretty good. No facial hair though because it comes in gray. Also white collar.

I took this class a while back. If you’re interested in neural networks it’s a great start.

https://www.coursera.org/learn/machine-learning

If they hired people that trained the thinking machine that an 07 and 08 Accord are only distinguished by different tail lights, or that a 2001 F150 “LL” and a 2011 “F150 SVT” are hard to distinguish, the civil libertarians can rest easy. The 08 Accord was the first year of a completely new model, remembered as the largest, most Buick-like Accord generation (and categorized by the EPA as a fullsize). And I have never heard of a 2001 F150 Supercrew “LL,” and am 100% certain they never made a 2011 F150 SVT. And besides, my mom could tell a 2001 from a 2011. That’s not detail recognition, it’s 3 generations of separation.

Yup, I’m calling “Bulk wrap!” for the same reason you are. And even if we give ’em the benefit of the doubt—oops, typo, they meant ’06/’07 or ’08/’09—they’re still full of beans. I defy anyone, human or not, to discern an ’06 Accord from an ’07, or an ’08 from an ’09, without scrutinising the VIN (or cheating and looking up the car by licence plate number).

The certitude they throw around is totally belied by the four examples they give. Oh, that’s definitely, definitely an “’03 Civic”, sez the super-smart Neural Net? An ’03, it’s totally fer certain, and not a visually identical ’01, ’02, ’04, or ’05? Uh-huh. And the same goes for that “’06 Sentra”, which the omniscient Neural Net is absolutely sure isn’t an ’04 or an ’05.

It gets even richer with that Ford van, which is identified as a 2003(!!! Is too is too is too times a finity; we know because Neural Net—hallowed be its name—said so!) “Econoline Cargo”, in the “e-150 trim”. Um, no. BZZZT thanks for playing, but there’s nothing such as an ’03 Econoline. That name was dropped after 2000 in the US to become the E-series: E-150, E-250, E-350, etc. Moreover, those vans had the pictured front end from ’92 to ’07. Some of them had composite headlamps and others had the rectangular sealed beams as this one does. Some of them had turn signals with an amber lens and a clear bulb; others had a clear lens with an amber bulb. Grilles came in grey, black, and maybe a shade or two of argent. All these parts are directly interchangeable, and regardless of HRH Neural Net’s prognostications, the van is between 12 and 27 years old. Y’really think Jim’s Plumbing gives a crap if the van gets repaired with a black grille and headlight bezels rather than the original grey ones? No, they care the van gets back on the road as quickly and inexpensively as possible. So does HRH Baron Von Neural Net have a secret decoder ring that lets it see invisible non-differences between an ’03 and an ’07 and a ’99, and lets it know all the parts are original?

Then there’s that “1994” Accord, which for some strange reason is actually a 1995-’97. Perhaps it’s had the later trunk lid and taillamps swapped on, or perhaps the Neural Net and its programmers are full of manure.

Yes, I know this is a rant. No, I’m not sorry. Crap like this pisses me off.

…by “crap like this”, I primarily mean academic papers full of handwaving and exaggerated claims built on the knowledge equivalent of soggy landfill. But I also mean dumb computer tricks that we’re just supposed to accept as a marvelous part of our wonderful future—much like our refrigerators and vacuum cleaners and cars being on the internet.

Does Google have x-ray vision so they can see into garages? I would hate to see the computer get the wrong impression of me because of the 20-year-old pickup in the driveway versus the 3-year-old convertible in the garage.

So my wife’s VW in the garage belongs to a Democrat, and my extended cab pickup belongs to a Republican? Well, one out of two is right. Still, interesting stuff, and I think the critics are just jealous they don’t get paid to do this. Or get college credit for these classes: https://revs.stanford.edu/course?field_academic_year_tid=All&page=3

Jealous? Lulz. I would be severely embarrassed to have my name associated with a paper blithely describing a demonstrably severe failure as a triumphant success.

I research my vehicle purchases for reliability. Once they pass that test, it’s all about price. Three cars in my driveway from three different corporations. Analyze that, Google.

What’s Google got to do with it? This study just used Google Street View as their input.

Now what Google did analyze was your car research and they sold ads to companies based on your searches.

If you dig deeper into the article it says that the computer only correctly identified the vehicle 1/3 of the time. I’d give that a failing grade in general. Of course, how close their answers are could mean it does a better job than the numbers suggest. For example, with the Econoline in the picture, the only way to tell the exact year is to look at the VECI or VIN labels, and without a picture of the badges it is impossible to tell whether it is a 250 or 350.

The other major problem with their methodology is that they broke up the training group by first letter of the counties selected. That means in some cases there is zero local data being used, and as we car people know, the popularity of particular makes and models does vary significantly in different parts of the country. For example, the Subaru Outback is the best-selling vehicle in my and several other states despite the fact that it is not the overall best seller in the US.
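That concern can be illustrated with a toy split. A minimal sketch in Python, where the county names, the states assigned to them, and the choice of training letters are all made up for illustration and are not taken from the study:

```python
# Hypothetical county -> state mapping, for illustration only.
COUNTIES = {
    "Adams": "CO", "Boulder": "CO", "Clark": "NV",
    "King": "WA", "Pierce": "WA", "Snohomish": "WA",
}
TRAIN_INITIALS = set("ABC")  # assumption: which first letters go to training


def split_by_initial(counties, train_initials):
    """Put a county in the training set if its name starts with a chosen letter."""
    train, test = [], []
    for name in sorted(counties):
        bucket = train if name[0].upper() in train_initials else test
        bucket.append(name)
    return train, test


def states_without_training_data(counties, train):
    """List states that appear in the data but have no county in training."""
    train_states = {counties[name] for name in train}
    return sorted(set(counties.values()) - train_states)


train, test = split_by_initial(COUNTIES, TRAIN_INITIALS)
print("train:", train)
print("test:", test)
print("states with no training counties:",
      states_without_training_data(COUNTIES, train))
```

In this made-up example every county in one state lands in the test set, so nothing learned in training reflects that state’s local vehicle mix. Whether the real split left whole regions uncovered would depend on the actual county list.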

I wonder what their sample set is. I’ve played around with toy networks and the sample I used was a standard one from Stanford where all the images are 96×96. (For reference a frame of HDTV is 1920×1080.) Good enough for “car” vs “flower” but not “Honda accord” vs “Chevy Malibu.”

This is what I’ve been waiting for.

That’s not a 1994 Accord (2nd from right), the taillights are wrong – they’re from the 1996-7 mid-cycle refresh.

I wonder how many areas’ results would be skewed by the number of work trucks present on the streets. I know that when I’m working on a house in some of Atlanta’s richest neighborhoods I’m always amazed by the number of work trucks and vans parked on the street, mine included. Many of these houses are set far back off the street and the owners’ cars are not visible from the road. It would be interesting to see the results if one of these scans was done on Tuxedo Road in Atlanta. Some of the most expensive and beautiful houses in the city, but the road is always filled with plain Jane white work trucks like my 03 Ram ST snooze mobile.

Good point, particularly in certain older neighborhoods with large enough lots to support off-street parking, and no HOA rules that mandate off-street parking.

Where I live is actually a number of small towns that have grown together over the past 30 years or so, and the older neighborhoods surrounding the four largest “downtowns” are all in various stages of renewal and gentrification. After 6:00pm, there seems to be some correlation between the frequency of parked cars on the street and in driveways, and the condition of the houses and the upkeep of the yards surrounding them. If I could peer inside of the houses, I’d bet that the houses NOT surrounded by vehicles have a higher frequency of solid surface countertops.

What happens when most of the residents park their cars in the garage or are away while the Amazon van is making a delivery?

Does this skew the scoring of the neighborhood???

You mean the Amazon Altima or Civic?

All I’ve got to say is they need to tune in their value-estimator… ’04 Civic is worth $8700?!

In my neighborhood, there’s lots of motor boats on trailers and RVs parked in the driveway while the owners are away at work during the day.

I wonder how these influence the (credit) score of the neighborhood.

You bring up a good point: the Google Street View vehicles operate during the work day, when most people are likely also at work, so their vehicles are not in their neighborhood, and even if they are at home their car may be in the garage.

I bet I could beat “The Machine” (anyone ever see “Person of Interest”?) identifying Impalas from ’58 to ’76… heck, make that all full size Chevys from about ’52 to ’76*…

…but then I’ll bet everyone on these pages could do that, at least those of us of a certain age.

* Things get blurry for me after the B-Body downsize in 1977.

That’s alright; I’m pretty sharp with the ’77-’90 Chev B-bodies, and I’m sure there are those sharper than I.

Are we convinced that despite the description the images aren’t being crosschecked by registration tag data? It’d be simple enough, after all, each pictured vehicle has a tag on prominent display. Today such data sharing is everyday wide-open stuff.

Try it for yourself:

https://www.fmmotorparts.com/fmstorefront/federalmogul/en/USD/catalog/partsFinderLicensePlateLookup

I can claim the dubious distinction of having been one of the “humans” who assisted with this project, by answering a craigslist ad back in the spring of 2014. I was unemployed at the time, and it was a relatively easy way to make a few bucks during my copious free time. I ended up doing maybe ten hours a week for about four weeks.

While I tend to agree with Mr. Stern’s assessment of “Bulk Wrap” on this subject, I believe I can get a good idea of an area’s economic health by looking for the oil drip spots in the parking lot of the Walmart. Less oil = more money and v.v.

Why not just buy DMV registration lists from the states? That is not as techno-wow(TM) interesting, but probably more accurate.